I wanted to share the applications and services running in my homelab, but I realized that understanding my network configuration is essential for grasping the complete picture. This blog post serves as a necessary introduction before diving into the specifics of my homelab setup.

To keep this post focused, I won’t try to list every service running in my homelab — that’s for another post. Instead, I’ll walk through a few unique configuration details, such as where this site is hosted and how external ingress reaches my network.

The 10,000-foot view

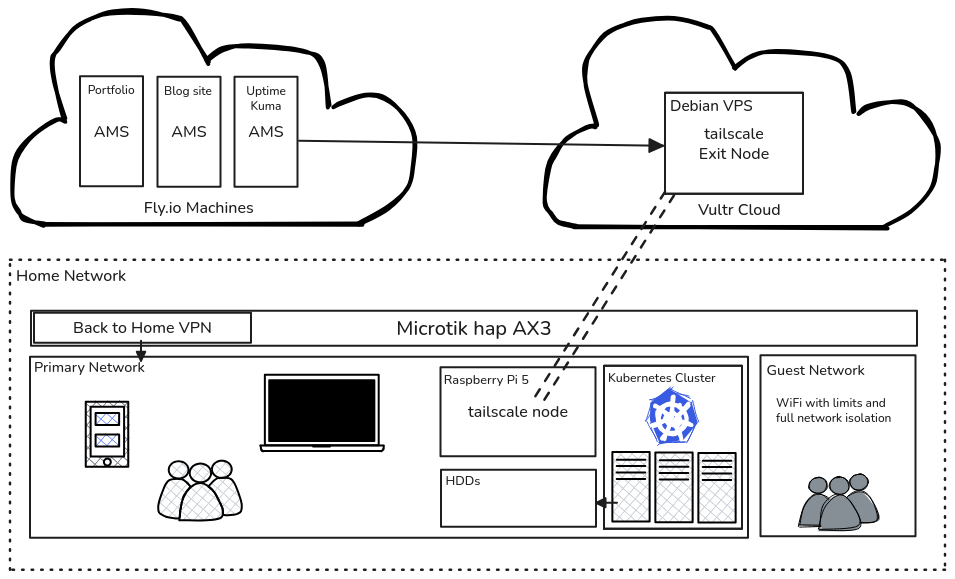

This diagram shows my setup at the time of writing. It may change, as I have more changes planned.

At a high level, I have three main components: my home network, a Vultr cloud server, and some containerized applications on Fly.io.

Fly.io - Cloud

I applied for a role at Fly.io but wasn’t selected due to a profile mismatch. It was still a great experience, and they gave me a $500 USD lifetime credit on my account — I still have a couple hundred dollars left.

On Fly.io, I host this blog, my portfolio website, and an instance of Uptime Kuma that monitors my external services. You can check the status of everything at https://status.coder3101.com; the Kuma instance is configured to send alerts to my Telegram if anything goes wrong.

One nice thing about hosting on Fly.io is that apps can scale down to zero and then start up quickly on demand. They run on lightweight Firecracker microVMs, which helps keep resource use (and cost) low. That makes Fly a good fit for my blog and portfolio, which don’t need to run constantly. I keep Uptime Kuma running all the time, though, because I want a continuous way to monitor my services.

Vultr - Cloud

It’s a bit ironic — I work at DigitalOcean and have an employee account, yet I run a small VPS on a competitor (Vultr). It’s cheap — under $5/month — so it’s not a big expense; while I could save that by using DigitalOcean, I find it useful to try a competitor’s offering firsthand to see how their features and workflow compare.

This VPS acts as a Tailscale exit node for my VPN and, because all my devices are on the same Tailscale network, it also doubles as an ingress point to reach my homelab services from anywhere. My Uptime Kuma instance on Fly.io monitors the VPS, and the VPS runs a small service I wrote called ping-checker. Ping-checker is a tiny HTTP server that pings a specified endpoint and translates the ping result into an HTTP response. Uptime Kuma sends requests to the VPS to confirm the node is reachable, and ping-checker performs a connectivity check from the VPS back to a homelab server on the same Tailscale network. That way, I get notified if either the VPS or the home network is down.
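The idea behind ping-checker can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual service: the target address, the port, the function names, and the choice of 200/503 status codes are all hypothetical, and it relies on the Linux `ping` flags `-c` (count) and `-W` (timeout in seconds).

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical Tailscale address of a homelab server (assumption).
TARGET = "100.64.0.10"


def ping_once(host, timeout_s=2, runner=subprocess.run):
    """Return True if a single ICMP ping to `host` succeeds.

    `runner` is injectable so the logic can be exercised without a network.
    """
    try:
        result = runner(
            ["ping", "-c", "1", "-W", str(timeout_s), host],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s + 1,
        )
    except (subprocess.TimeoutExpired, OSError):
        # The ping binary is missing or the call hung: treat as unreachable.
        return False
    return result.returncode == 0


def status_for(reachable):
    """Translate a ping result into an HTTP status code and body."""
    return (200, "reachable") if reachable else (503, "unreachable")


def make_handler(check):
    """Build a request handler that runs `check()` on every GET."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            code, body = status_for(check())
            payload = body.encode()
            self.send_response(code)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        def log_message(self, *args):
            # Keep the sketch quiet; a real service would log requests.
            pass

    return Handler


if __name__ == "__main__":
    # Port 8080 is an arbitrary choice for this sketch.
    handler = make_handler(lambda: ping_once(TARGET))
    HTTPServer(("0.0.0.0", 8080), handler).serve_forever()
```

With something like this running on the VPS, an HTTP monitor that treats non-2xx responses as failures would report the home network as down whenever the ping from the VPS fails.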

The catch is a possible false positive: if the VPS is down while the home network is up, the home check fails too, because it runs through the VPS. This should be very rare, and I would see both alerts fire at once, which makes it easy to recognize. I also don’t want to set up another ingress point for now.
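The reasoning for reading these two alerts together can be written down as a small decision function. This is only a sketch of the logic described above; the names `Diagnosis` and `diagnose` are made up for illustration, and it assumes the home check is only reachable through the VPS.

```python
from enum import Enum


class Diagnosis(Enum):
    ALL_UP = "VPS and home network are both reachable"
    HOME_DOWN = "VPS is up, but it cannot reach the home network"
    VPS_DOWN_HOME_UNKNOWN = "VPS is down, so the home alert may be a false positive"
    INCONSISTENT = "home check passed while the VPS check failed (likely flapping)"


def diagnose(vps_monitor_up, home_monitor_up):
    """Interpret the two monitor states as a single diagnosis.

    The home check is relayed through the VPS, so a failing VPS check
    makes a failing home check uninformative.
    """
    if vps_monitor_up and home_monitor_up:
        return Diagnosis.ALL_UP
    if vps_monitor_up:
        return Diagnosis.HOME_DOWN
    if home_monitor_up:
        return Diagnosis.INCONSISTENT
    return Diagnosis.VPS_DOWN_HOME_UNKNOWN
```

When both alerts fire together, `diagnose(False, False)` lands in the "home unknown" case, which matches the false positive described above.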

Home Network

ISP and Router

Finally, my home network: a gigabit fibre link with a VSOL ONU and a MikroTik hAP ax3 router. The connection is behind CGNAT (carrier-grade NAT), so I don’t have a publicly routable IPv4 address and can’t host services directly from home. Instead, I use the VPS as my ingress point to reach homelab services from anywhere.

My ISP does hand out an IPv6 /64 that I could use with DDNS, but that would only be reachable over IPv6, so I’m not using that option for now.

For management purposes, a WireGuard "Back To Home" VPN is configured on the MikroTik router. It lets me manage the router remotely and still reach the home network if my VPS or Tailscale is down. It relies on MikroTik relays and IPv6 with DDNS for connectivity, so it’s a bit slow, which is why it’s only the backup.

The home network is split into two subnets: a private “home” subnet for devices that need access to local services and the internet, and a “guest” subnet for devices that only require internet access. Guest devices are isolated — they cannot reach home network services or even ping each other. Instead of using VLANs, I enforce this isolation with MikroTik’s advanced firewall rules. The router also supports a Single SSID / multiple-passphrase feature, which lets me advertise one SSID but assign different passwords to place devices on different networks.

The Homelab

It’s a small cluster made up of a few mini‑PCs and a Raspberry Pi, all joined to my Tailscale network. The mini‑PCs are linked over 1 Gbps switches and form a Kubernetes cluster, with a separate NAS providing storage. I run roughly twenty self‑hosted services on that cluster — I’ll outline them in my next post, so stay tuned.

TLS certificates are managed by cert-manager (using acme.sh as an ACME client) and are renewed automatically. Domains and DNS records are handled through Cloudflare. For example, my n8n instance is reachable within my home network at n8n.internal.coder3101.com.